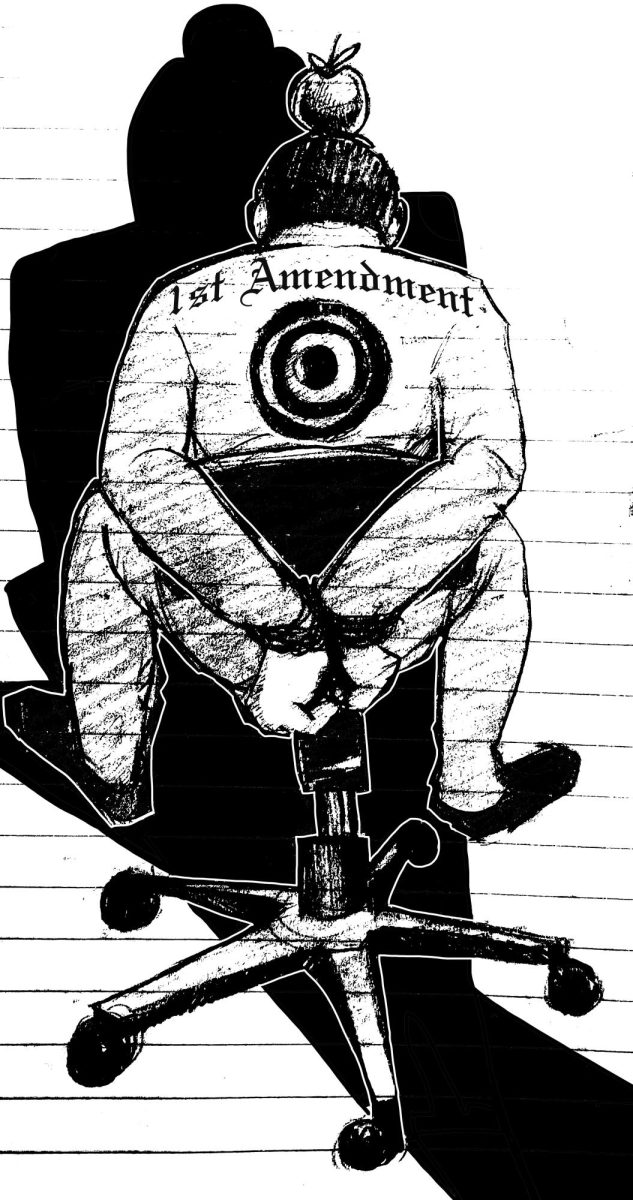

The morality of Apple’s backdoor

March 8, 2016

To unlock or not to unlock, that is the question.

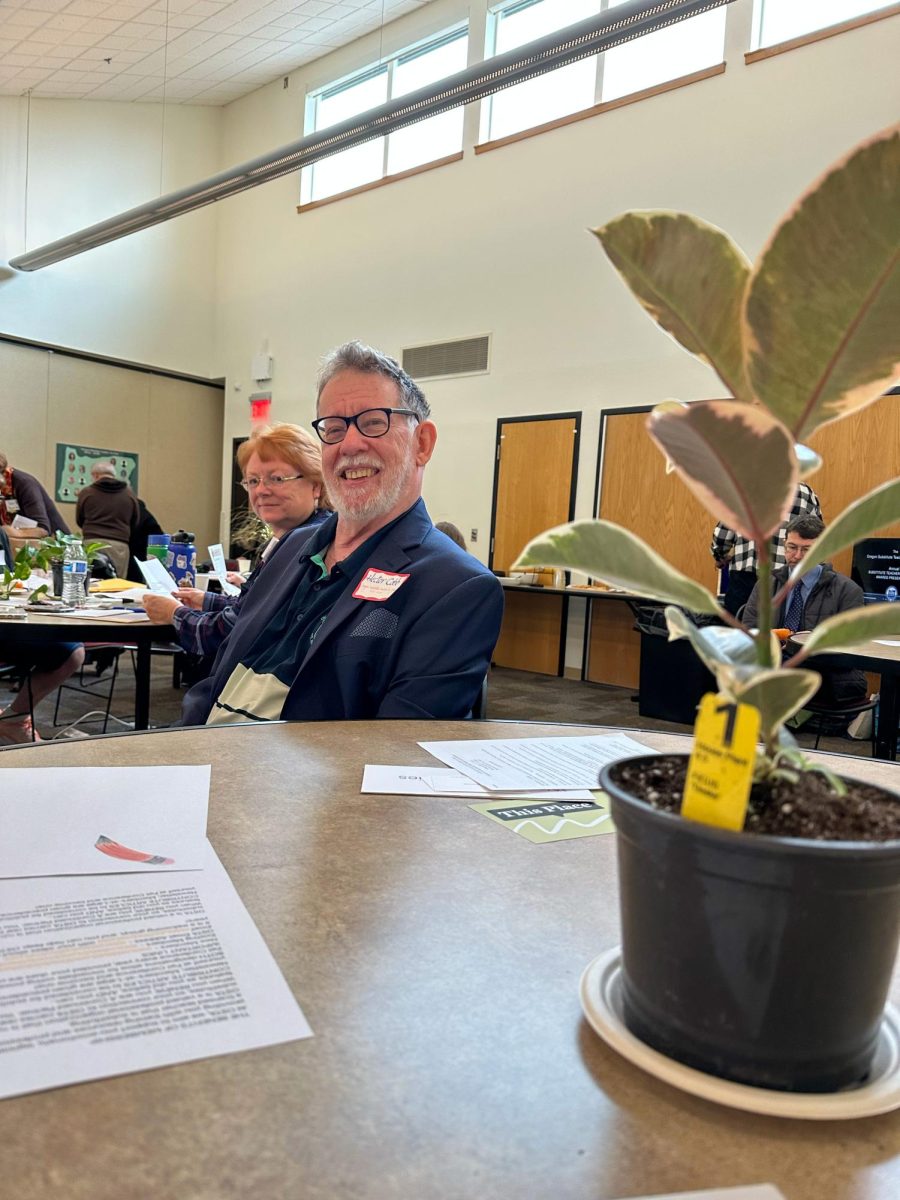

Following the San Bernardino terrorist attack, authorities recovered three cell phones belonging to the deceased shooters. Two were damaged beyond repair, while the third, a government-issued Apple iPhone 5c, was found in working condition. The iPhone, so far untouched, is thought to hold vital information pertaining to the attack. The Federal Bureau of Investigation (FBI) theorizes that the phone may also include contacts the terrorists communicated with in the hours before the shooting.

It is also possible that the phone could contain plans for future attacks from associated forces.

The problem? The iPhone, protected by a short, numeric passcode, cannot be unlocked as easily as it may seem. Over the years, Apple has worked diligently to build advanced encryption into its products, including an auto-erase function that deletes all of the phone’s data after 10 incorrect passcode guesses. Authorities are unsure whether this safety feature has been enabled, but with 10,000 possible combinations for a four-digit passcode, they are unwilling to take the risk of finding out.
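To put those numbers in perspective, here is a rough sketch of the arithmetic. It assumes a four-digit passcode (which is what 10,000 combinations implies), that every code is equally likely, and that auto-erase is switched on. The function names are made up for illustration and have nothing to do with Apple’s actual software.

```python
from fractions import Fraction


def keyspace(digits: int) -> int:
    """Number of possible numeric passcodes of the given length."""
    return 10 ** digits


def chance_within_limit(digits: int, attempts: int) -> Fraction:
    """Chance of hitting the right code by distinct guesses before the limit.

    Assumes each passcode is equally likely (an assumption, not a known fact
    about this phone) and that no guess is repeated.
    """
    total = keyspace(digits)
    return Fraction(min(attempts, total), total)


# Ten guesses against a four-digit code, the scenario described above.
odds = chance_within_limit(digits=4, attempts=10)
print(keyspace(4))   # size of the four-digit keyspace
print(float(odds))   # probability of success before auto-erase triggers
```

Under those assumptions, ten blind guesses cover one code in every thousand, which is why investigators do not want to guess by hand.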

In order to unlock the phone, the FBI must use software that does not currently exist. While the government has the technology to create such a program, it would still need Apple’s consent and access to Apple’s systems to run it on the device. The $537 billion company can also refuse to allow software that it does not want to support, which prevents the FBI from developing its own tool to bypass the iPhone’s encrypted walls. Fortunately, Apple has the means and knowledge to create the “backdoor” into the iPhone. Less fortunately, Apple is unwilling to do so.

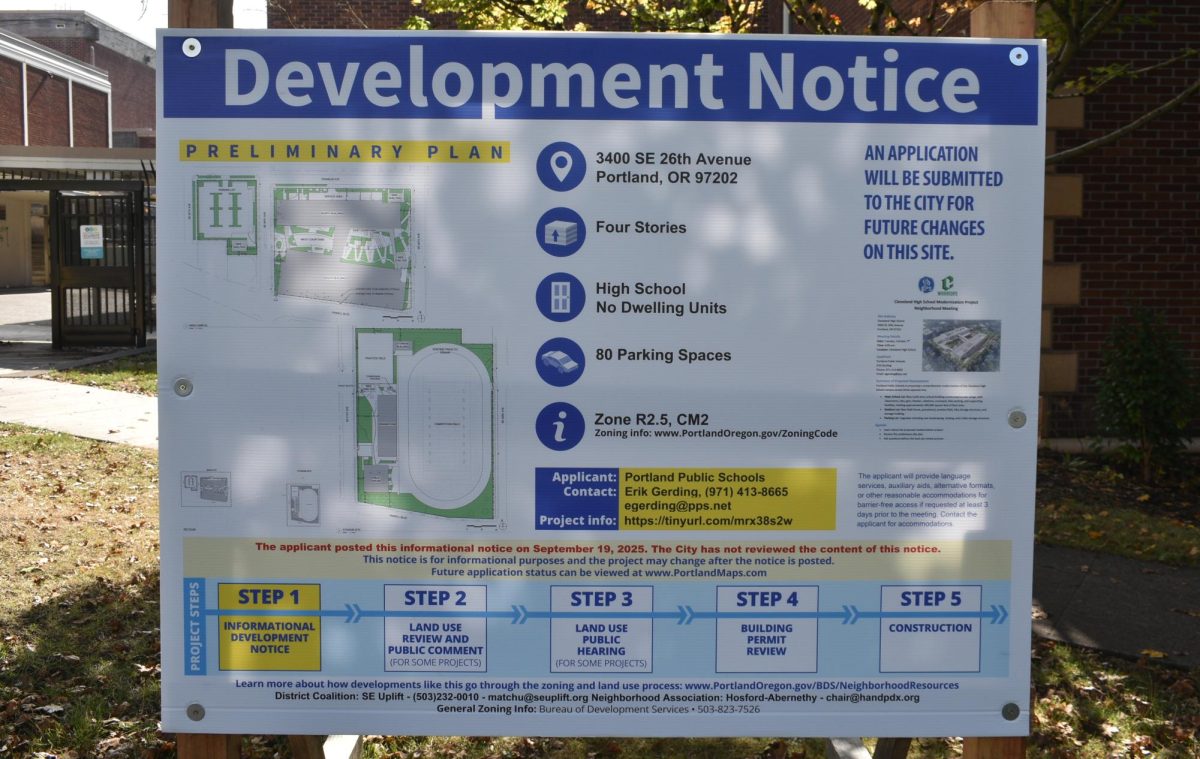

With Apple refusing to cooperate, the government took the company to court. After much dispute, a California judge ordered Apple to comply with the government’s demands. The court order requires that Apple provide “reasonable technical assistance” to the FBI, and outlines three provisions: (1) bypassing or disabling the auto-erase function, whether or not it has been enabled, (2) allowing the FBI to submit passcodes to the device for testing, and (3) ensuring that the software will not purposely inhibit the FBI’s attempts at unlocking the device.

Just when the matter appeared settled, Apple released a 1,000-word statement to the public, informing customers of its refusal to follow through with the court order. Apple argues that the software is “too dangerous” to create, and that it would “weaken security for hundreds of millions of law-abiding customers,” according to Apple’s chief executive, Timothy D. Cook. Apple claims that if the software were to get into the wrong hands, hackers and criminals could exploit the weakness in security and steal personal information from devices. In the statement, Apple also raised the fear that if the government could demand the creation of this software, it could theoretically also order Apple to build surveillance software to intercept your messages, track your location, access your phone’s microphone or camera without your permission or knowledge, and access your medical and financial records. “It would be the equivalent of a master key, capable of opening hundreds of millions of locks,” said Cook. Of course, the device would have to be in one’s physical possession in order to access any of these functions. Apple argues that this is an overreach by the government, and that the implications of the government’s demands are “chilling.” Mark Zuckerberg, creator of Facebook, and Sundar Pichai, Google’s chief executive, voiced their support for Apple’s decision.

“It is unfortunate,” stated the Department of Justice in a news release, “that Apple continues to refuse to assist the Department in obtaining access to the phone of one of the terrorists involved in a major attack on U.S. soil.” The Department maintains that the request “presents no danger for any other phone.” Apple disputed this, stating, “While the government may argue that [the software’s] use would be limited to this case, there is no way to guarantee such control.”

This story is starting to sound like something out of a science fiction novel. It seems that there are no good options available to us. How can we possibly choose between securing our safety now and securing it later, when we cannot have both?

Even considering the extreme measures that could be taken in the future, it is impossible to ignore the dire consequences we may face if we do not obtain the contents of the device. It is entirely possible that the phone has been wiped clean of evidence, but if it has not, if it really does contain information about a future attack, leaving it locked is too great a gamble.

Furthermore, if it is possible for us to create the software now, it could certainly be developed by enemy forces at any time. We need to protect ourselves with the knowledge of the software’s capabilities, and make further advances toward safeguarding our information from hackers. It’s very scary to think that my personal records could be compromised, but it is even more frightening to think that there were more mass shootings in 2015 than there were days in the year, according to NBC. In addition, as recorded by Johnston’s Archive, nearly 3,300 people have died from terrorist-related attacks since 9/11. Having my online information hacked will not kill me. A terrorist attack, however, could.

Bottom line, this software could save lives. That’s a security we won’t compromise.